|

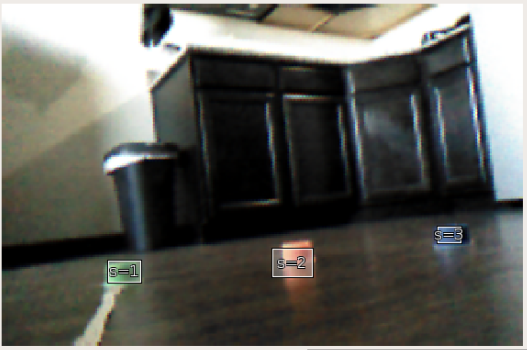

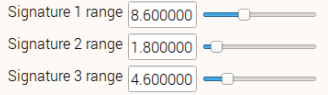

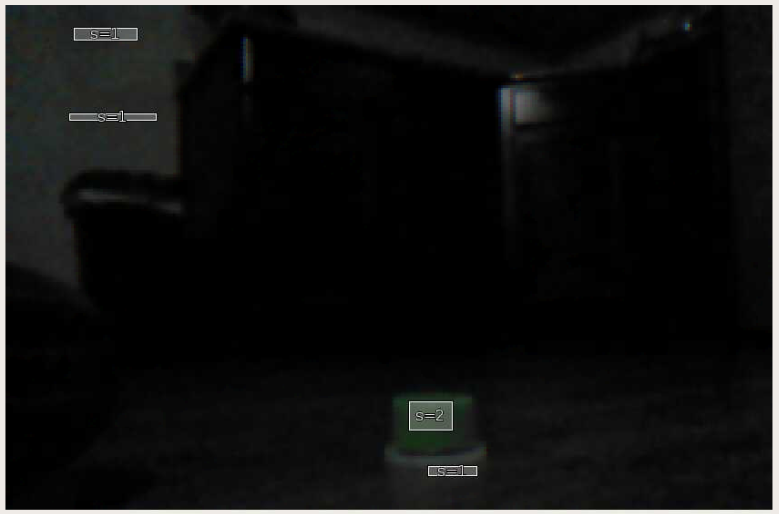

My initial tests of the Pixy2's object recognition were disconcerting. The camera's color-based recognition seemed very light-dependent and prone to false positives. I had hoped that more even lighting and the consistent background of the arena would make the recognition more consistent.

The Pixy2's sensitivity can be set, so I tried adjusting it from its default value. This seemed to produce more accurate results without the false positives I had seen previously. The object was still recognized at 1.5 meters, the maximum distance in the arena, but recognition was lost beyond that. In Tidy Up the Toys, the colored blocks start 1.1 meters from the robot, and at this distance the object was reliably recognized. In lower light the object was still recognized, although sporadic false positives appeared. There were no false positives with similar objects of different colors.

The Pixy2 can store 7 different objects. After I trained the camera on each of the three differently colored objects, it was able to recognize all three. I found that it was necessary to train the Pixy2 in a well-lit environment. This seemed to give the camera a better understanding of the objects' shapes, allowing it to still recognize the objects when the lighting was worse. I also found that different colors required different sensitivities to avoid false positives.

-Colin
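The sensitivity tuning described in this post happens on the camera itself, but a similar per-color check could also be applied in software once detections have been read from the camera. The following is only a minimal sketch under that assumption: the `Detection` record, the `filter_detections` function, and the area thresholds are hypothetical names and numbers chosen for illustration, not part of the Pixy2 library. It drops detections whose bounding box is too small to be a real block, with a separate threshold for each trained color.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One detected block (hypothetical record, not a Pixy2 library type)."""
    signature: int  # which trained color matched
    x: int          # bounding-box center, in pixels
    y: int
    width: int      # bounding-box size, in pixels
    height: int


def filter_detections(detections, min_area_by_signature, default_min_area=0):
    """Keep only detections whose box area meets the minimum for their signature."""
    kept = []
    for det in detections:
        min_area = min_area_by_signature.get(det.signature, default_min_area)
        if det.width * det.height >= min_area:
            kept.append(det)
    return kept


# Made-up example frame: signature 1 needs a larger area than signature 2.
frame = [
    Detection(signature=1, x=160, y=100, width=40, height=30),  # plausible block
    Detection(signature=1, x=20, y=10, width=3, height=2),      # tiny speck
    Detection(signature=2, x=250, y=120, width=12, height=10),  # plausible block
]
good = filter_detections(frame, {1: 200, 2: 100})
print([(d.signature, d.width * d.height) for d in good])
```

With these made-up numbers, the tiny speck is discarded and the two larger detections are kept. Real thresholds would have to be found by experiment at the distances used in the arena.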

We started off with the idea that we would try to progress with our bot as normally as possible. Unfortunately, the situation the world is currently dealing with has put a halt to that. As of November 16th, 2020, New Mexico is in another shutdown. This has reduced the availability of components and shipping, and our team has yet to meet in person. We have continued to meet weekly online to catch up, talk about ideas, and work on problems together.

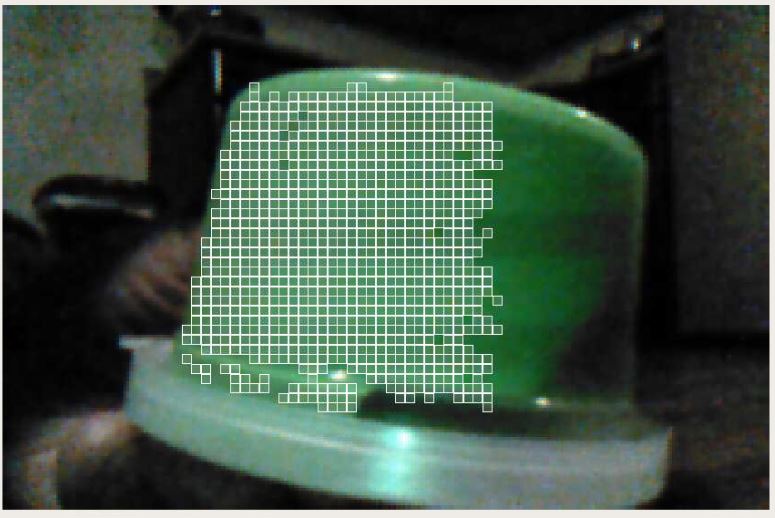

Currently, Colin is working on object recognition, and Jimmy is working on voice recognition, which will hopefully give us another way to control the bot. Joseph is continuing to refine the bot’s armature and grappling claw. I am looking into new ways to control the bot remotely. We are doing this work on our personal Dexter robots, as we only have one competition robot. We hope that what we have learned with the Dexter bots will transfer to the competition robot without many problems.

-Rob

The Pixy2 uses color to recognize objects, and returns the coordinates of a bounding box around those objects, similar to the TensorFlow algorithms. In the Pi Wars challenges, the toy blocks and fish tank should be distinctly colored objects against a non-colored arena wall, so the Pixy2 seems like a promising option.

The Pixy2 is trained by holding a colored object in front of the camera. The camera uses a region growing algorithm to find the connected pixels in the image that make up the object in front of it. It seems to use the dominant color of the object to decide which pixels are part of the object. The more pixels the Pixy2 is able to detect, the more accurate its understanding of the object's shape will be. Once the Pixy2 has been "trained" to detect the object, it can then identify the same object in front of it.

This is where the big limitation of the Pixy2 appears. Color is inherently tied to light. The Pixy2 seems to have poor light sensitivity in general, which makes it difficult to use in rooms that aren't well lit. Even when the object is still clearly visible in the camera's image, it's not always detected. Why? Because the difference in lighting has changed the saturation of the object's color, so the object no longer matches the color that the Pixy2 was trained to recognize. Adding more light can cause a similar problem.

Will this make the Pixy2 unusable? Possibly. We plan to run the challenges in a well-lit space, with relatively consistent lighting. Hopefully the combination of consistent lighting and consistent arena walls will mitigate these problems.
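To make the idea of region growing more concrete, here is a minimal sketch of the general technique on a tiny grid of hue values. This is not the Pixy2's actual firmware algorithm, which the post only describes from the outside. The function names, the `tolerance` parameter, and the sample image are all assumptions made for illustration, and hue wraparound is ignored to keep it short.

```python
from collections import deque


def grow_region(hues, seed, tolerance):
    """Return the set of (row, col) pixels connected to `seed` whose hue is
    within `tolerance` of the seed pixel's hue (4-connected neighbors)."""
    rows, cols = len(hues), len(hues[0])
    seed_hue = hues[seed[0]][seed[1]]
    region = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if 0 <= nr < rows and 0 <= nc < cols and (nr, nc) not in region:
                if abs(hues[nr][nc] - seed_hue) <= tolerance:
                    region.add((nr, nc))
                    queue.append((nr, nc))
    return region


def bounding_box(region):
    """Return (top, left, bottom, right) of a pixel region, inclusive."""
    rs = [r for r, _ in region]
    cs = [c for _, c in region]
    return min(rs), min(cs), max(rs), max(cs)


# Tiny 4x5 "image" of hue values: a block of hue ~120 on a hue 0 background.
image = [
    [0,   0,   0,   0, 0],
    [0, 118, 122,   0, 0],
    [0, 120, 121, 119, 0],
    [0,   0,   0,   0, 0],
]
region = grow_region(image, seed=(2, 2), tolerance=5)
print(len(region), bounding_box(region))  # prints: 5 (1, 1, 2, 3)
```

A tight `tolerance` also hints at why lighting matters: if a change in light shifts the pixel values far enough from the trained color, they no longer match and the region shrinks or disappears.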

-Colin

As PiWars comes closer, CNM HackerSpace has registered and been accepted in the intermediate category. A good portion of the team is new to PiWars, and we have varying degrees of experience in programming and design. To bring the team to a similar level, we have borrowed a few Dexter GoPiGo 3s to learn how various sensors and motors work.

Our first task as a team was to get our Dexter robots built and moving. Once we all had our robots moving, we started attaching sensors and getting our robots to move based on input from those sensors. The first sensor we experimented with was the GoPiGo distance sensor. Below is an image of one of our experiments using that sensor. Once we got the distance sensor working, we added a servo to turn the distance sensor in both directions, so the robot can see which direction is the better one to take, as seen below.

At the beginning of Fall Semester 2020, CNM’s PiWars competitive robotics team faced a major hurdle. We are attempting to recreate remotely what has previously been done in a social setting. Not only has the current situation made it harder to meet and work collectively, but we also needed to figure out how to share one robot between four team members.

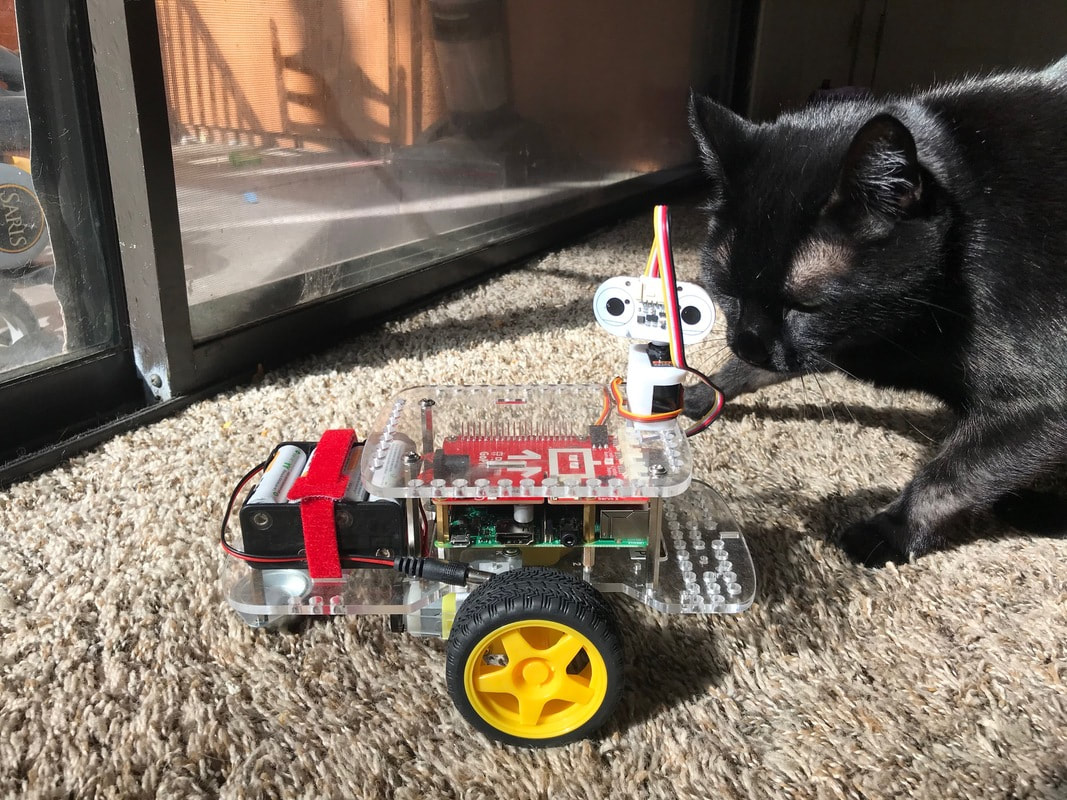

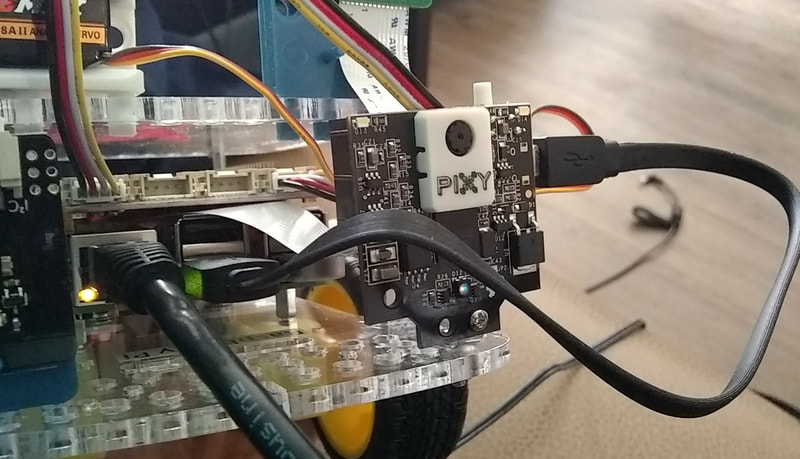

The current fix for this is that Kerry Bruce, CNM instructor and one of the heads of CNM Hackerspace, is supplying us with four different Dexter robots to practice on while we discuss the collective plan for the team bot. Luckily, we will be using an existing robot built for last year’s PiWars competition by the previous team. This year’s team consists of two previous PiWars team members and two newcomers. Jimmy Alexander has the competition robot and will be leading the way on the team’s bot. Most weekly team discussions have been about what to change on the competition bot, dubbed HAL 4.0, to polish it up from last year’s iteration. When not talking about what to change on the bot, the team members are also working with the Dexter bots to practice and play with the programming features of certain sensors that we feel will be necessary to HAL 4.0.

-Robert

As a potentially easier alternative to TensorFlow, we decided to try out the Pixy2 camera. This camera has built-in object recognition, similar to TensorFlow, using color. The PiWars "toys" and "fish tank" can be colored objects, set inside a white arena, so identifying them should be doable.

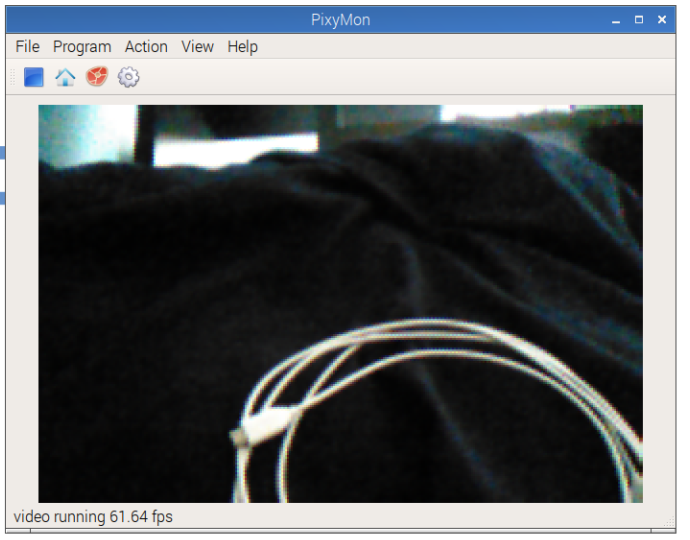

I attached the Pixy2 PCB to the front of the Dexter robot, and plugged it into the Pi via USB. The LED on the Pixy2 turned on, and I followed the provided instructions to install the Pixy2 driver libraries and dependencies. I tried running the provided test program, but pixy.init() failed, suggesting that the camera wasn't connected properly. The provided PixyMon application can be used to auto-detect the USB Pixy2. I tried connecting the Pixy2 to PixyMon on my computer, and it still failed. I then tried swapping out the USB cable, and the camera connected!

-Colin

How can our robot understand what it's looking at? How can it search for, say, a colored block, or the "fish tank"? One possibility is to use a camera with some sort of object recognition. I currently have a Pi Camera Module connected to my test robot, and I was wondering if there's a simple way to use that to identify objects. I searched around a bit on YouTube, and found some examples of object detection, using neural networks to classify objects in the camera's image frame.

Most of the examples I found used the open source library TensorFlow Lite to run an object classifier. TensorFlow Lite is a less resource-intensive version of the TensorFlow library, and is more suitable for devices like the Pi. This method of object detection supposedly works on any camera with adequate resolution.

But how do these neural network classifiers work? As I understand it, TensorFlow uses a particular kind of classification algorithm, called a Deep Neural Network (DNN) object detection algorithm. This is a model that can be trained to search for a particular type of object, and then draw boxes around each instance of that object it sees in the image. The algorithm is trained by giving it a large collection of training pictures, and another collection of test pictures. For each training picture, you have to manually draw a box around the object in that picture, and feed that information into the algorithm, along with the image. The neural network will then incrementally adjust itself, over and over, to find patterns between the training images. It will then look at the test images, and try to find that same object in those. You also draw identifying boxes in the test images, so the algorithm can see how accurate it was. You keep running the algorithm over the training and test images until its test-image accuracy reaches an adequate level. At that point, the values of the neural network's connections can be saved as a classifier file, and loaded onto the Raspberry Pi for use in the robot.

In our case, if the arena floor and walls are a uniform color that's distinct from the objects, then it should be relatively easy for the classifier to identify the objects. We could place the blocks in various places in the arena, take a bunch of training pictures of them, and then train the classifier. It looks like there are multiple DNN classifier algorithms that TensorFlow can use.
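One common way to score how well a predicted box matches a hand-drawn one, as in the test-image step described above, is intersection over union (IoU). The sketch below is a standalone illustration of that metric only. The post does not say which accuracy measure a given TensorFlow training setup reports, and the `(x_min, y_min, x_max, y_max)` box format and the sample coordinates are assumptions.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    # Corners of the overlapping rectangle, if the boxes overlap at all.
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    inter = max(0, ix_max - ix_min) * max(0, iy_max - iy_min)

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Made-up example: a hand-drawn box and a prediction shifted to the right.
hand_drawn = (10, 10, 50, 50)
predicted = (30, 10, 70, 50)
print(iou(hand_drawn, predicted))
```

A score of 1.0 means the boxes match exactly and 0.0 means they don't overlap. In this example the boxes share half of each box's area, which works out to an IoU of one third.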
The SSD MobileNet algorithm seems to run faster than the others, and is designed for low-powered devices, so that's probably a good place to start.

Once the classifier file is loaded onto the Pi, actually using it seems fairly simple. You can load it using the TensorFlow Python library, then take an image from the camera, and pass that to the classifier. The classifier will then return an array of box coordinates, drawn around each instance of the object it found in that frame, along with a confidence percentage.

If we want the robot to turn toward an object, we could turn the robot in a circle a few degrees at a time, take a camera picture after each turn, and run the classifier on that picture. If the object is found somewhere in the circle, we could keep turning until the robot is facing directly toward it. Then, we could determine the distance to the object from the relative size of its bounding box. I'm not sure if we could easily determine the orientation of the object using these tools. For example, I don't know if these classification algorithms could tell us whether the robot is facing the corner of the green box, or its side.

-Colin

Hello everyone, my name is Joey Ferreri. It is unfortunate that PiWars will not be held in person this year, but we are still excited to participate in PiWars at Home. Our team is made up of both veteran PiWars goers and newbies. This year we will continue iterating on CNM Hackerspace’s HAL robot, which we have incrementally upgraded over the past several years. To get everyone warmed up and prepared to work on the real deal, we’ve been using Dexter Industries’ GoPiGo3 robots for programming and for practicing with various sensors. Specifically, we have been familiarizing ourselves with the distance sensor, the light and color sensor, and the camera, all of which will aid us in autonomous completion of the challenges. We all hope to acquire the skills to develop our robot into its best iteration yet.
Here’s to a great PiWars at home!

Here is the main code for the distance sensor on my Dexter robot:

```python
import time

from easygopigo3 import EasyGoPiGo3

# Create one EasyGoPiGo3 object and use it for the motors and both peripherals.
gpg = EasyGoPiGo3()
distance = gpg.init_distance_sensor()
# init_servo() binds the servo to port "SERVO1" by default.
servo = gpg.init_servo()


def goOrNo(gpg, distance):
    """Look left and right, then turn toward the side with more room."""
    leftAngle = 150
    rightAngle = 30
    centerAngle = 90

    print("scanning")
    servo.rotate_servo(leftAngle)
    time.sleep(.5)  # give the servo time to reach the angle
    leftDist = distance.read_mm()
    servo.rotate_servo(rightAngle)
    time.sleep(.8)  # longer wait: the servo sweeps the full range
    rightDist = distance.read_mm()
    servo.rotate_servo(centerAngle)

    if leftDist > rightDist:
        gpg.turn_degrees(-70)  # more room on the left
    else:
        gpg.turn_degrees(70)   # more room on the right
    print("route found")


tooClose = 100  # millimeters
run = True
center = 90
servo.rotate_servo(center)

while run:
    d = distance.read_mm()
    print(d)
    gpg.forward()
    time.sleep(.2)
    if d < tooClose:
        print("too close")
        gpg.drive_cm(-50)  # back up before scanning
        goOrNo(gpg, distance)
```

This program lets the robot run autonomously. It drives forward until the reading from the distance sensor drops below a set threshold, then backs up a predetermined amount and checks its surroundings. It looks left, then right, stores the distance reading for each side, and turns toward whichever side has the greater distance. |

Author: CNM HackerSpace Robotics

Archives: December 2020

Categories |

