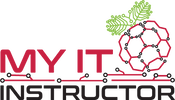

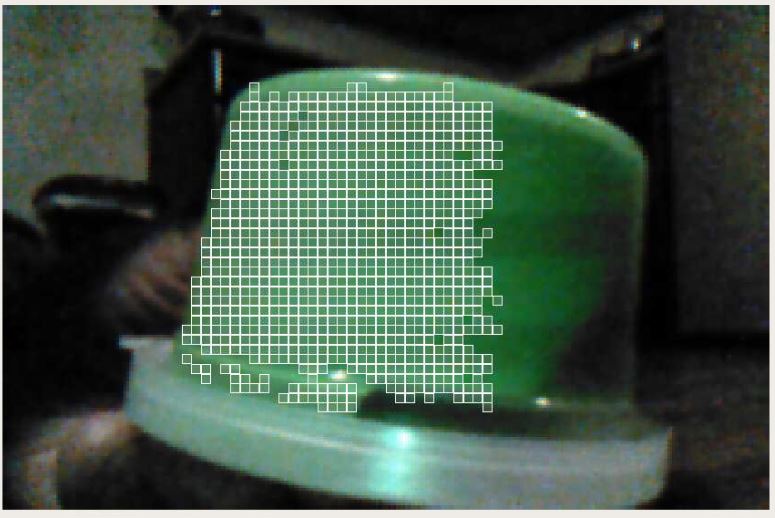

The Pixy2 uses color to recognize objects and returns the coordinates of a bounding box around each one, similar to the TensorFlow algorithms. In the Pi Wars challenges, the toy blocks and fish tank should be distinctly colored objects against a non-colored arena wall, so the Pixy2 seems like a promising option.

The Pixy2 is trained by holding a colored object in front of the camera. The camera uses a region-growing algorithm to find the connected pixels in the image that make up the object, and it seems to use the object's dominant color to decide which pixels belong to it. The more pixels the Pixy2 is able to detect, the more accurate its understanding of the object's shape will be. Once the Pixy2 has been "trained" on an object, it can identify that same object when it appears in front of the camera.

This is where the big limitation of the Pixy2 appears: color is inherently tied to light. The Pixy2 seems to have poor light sensitivity in general, which makes it difficult to use in rooms that aren't well lit. Even when the object is still clearly visible in the camera's image, it isn't always detected. Why? Because the change in lighting has shifted the saturation of the object's color, so the object no longer matches the color the Pixy2 was trained to recognize. Adding more light can cause a similar problem.

Will this make the Pixy2 unusable? Possibly. We plan to run the challenges in a well-lit space with relatively consistent lighting, and we hope that the combination of consistent lighting and consistent arena walls will mitigate these problems.
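The training and matching steps described above, and the lighting problem, can be illustrated with a small Python toy model. This is a sketch under stated assumptions, not the Pixy2's firmware: it assumes the "dominant color" is simply the hue and saturation of the pixel the object is trained from, that matching uses fixed hue and saturation tolerances (`HUE_TOL` and `SAT_TOL`, values chosen arbitrarily), and that extra light behaves like a uniform brightness offset added to every channel. All function names here are hypothetical.

```python
import colorsys
from collections import deque
from typing import List, Set, Tuple

Pixel = Tuple[int, int, int]          # (r, g, b), each 0..255
Image = List[List[Pixel]]
Signature = Tuple[float, float]       # (hue, saturation), each 0..1

# Assumed tolerances for this toy model (not Pixy2 settings).
HUE_TOL = 0.05
SAT_TOL = 0.2


def hue_sat(p: Pixel) -> Signature:
    """Convert an RGB pixel to (hue, saturation)."""
    h, s, _v = colorsys.rgb_to_hsv(p[0] / 255, p[1] / 255, p[2] / 255)
    return h, s


def matches(p: Pixel, sig: Signature) -> bool:
    """True if the pixel's hue and saturation are both close to the signature."""
    h, s = hue_sat(p)
    d = abs(h - sig[0]) % 1.0
    hue_dist = min(d, 1.0 - d)        # hue wraps around the color wheel
    return hue_dist <= HUE_TOL and abs(s - sig[1]) <= SAT_TOL


def grow_region(img: Image, seed: Tuple[int, int], sig: Signature) -> Set[Tuple[int, int]]:
    """Region growing: flood out from seed over 4-connected matching pixels."""
    rows, cols = len(img), len(img[0])
    region: Set[Tuple[int, int]] = set()
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        if (r, c) in region or not (0 <= r < rows and 0 <= c < cols):
            continue
        if not matches(img[r][c], sig):
            continue
        region.add((r, c))
        queue.extend([(r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)])
    return region


def bounding_box(region: Set[Tuple[int, int]]) -> Tuple[int, int, int, int]:
    """Return (left, top, width, height) of a pixel region."""
    rows = [r for r, _ in region]
    cols = [c for _, c in region]
    left, top = min(cols), min(rows)
    return left, top, max(cols) - left + 1, max(rows) - top + 1


def train(img: Image, seed: Tuple[int, int]):
    """'Train' on the object under seed: take its color, grow its region."""
    sig = hue_sat(img[seed[0]][seed[1]])
    return sig, bounding_box(grow_region(img, seed, sig))


def detect(img: Image, sig: Signature, min_pixels: int = 4):
    """Find every connected blob matching sig and return its bounding box."""
    seen: Set[Tuple[int, int]] = set()
    boxes = []
    for r in range(len(img)):
        for c in range(len(img[0])):
            if (r, c) in seen or not matches(img[r][c], sig):
                continue
            region = grow_region(img, (r, c), sig)
            seen |= region
            if len(region) >= min_pixels:
                boxes.append(bounding_box(region))
    return boxes


def wash_out(img: Image, offset: int) -> Image:
    """Simulate extra light as a uniform offset on every channel (an assumption)."""
    return [[tuple(min(255, ch + offset) for ch in p) for p in row] for row in img]


# A 6x6 gray "arena wall" with a 3x3 red "block" in it.
GRAY, RED = (128, 128, 128), (200, 30, 30)
scene = [[RED if 1 <= r <= 3 and 2 <= c <= 4 else GRAY for c in range(6)]
         for r in range(6)]

if __name__ == "__main__":
    sig, box = train(scene, (2, 3))
    print(box)                               # (2, 1, 3, 3)
    print(detect(scene, sig))                # [(2, 1, 3, 3)]
    print(detect(wash_out(scene, 20), sig))  # [(2, 1, 3, 3)]
    print(detect(wash_out(scene, 80), sig))  # []
```

In this model the hue of the washed-out block never changes, but its saturation drops from 0.85 to about 0.57 when 80 is added to each channel, which pushes it outside the assumed saturation tolerance. The block is still obviously red to a person, yet it no longer matches the trained signature, which mirrors the failure described above. Note also that hue alone would not be enough: the gray wall has a hue of 0, the same as the red block, and is only rejected because its saturation is 0.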

-Colin

Author: CNM HackerSpace Robotics

Archives: December 2020
